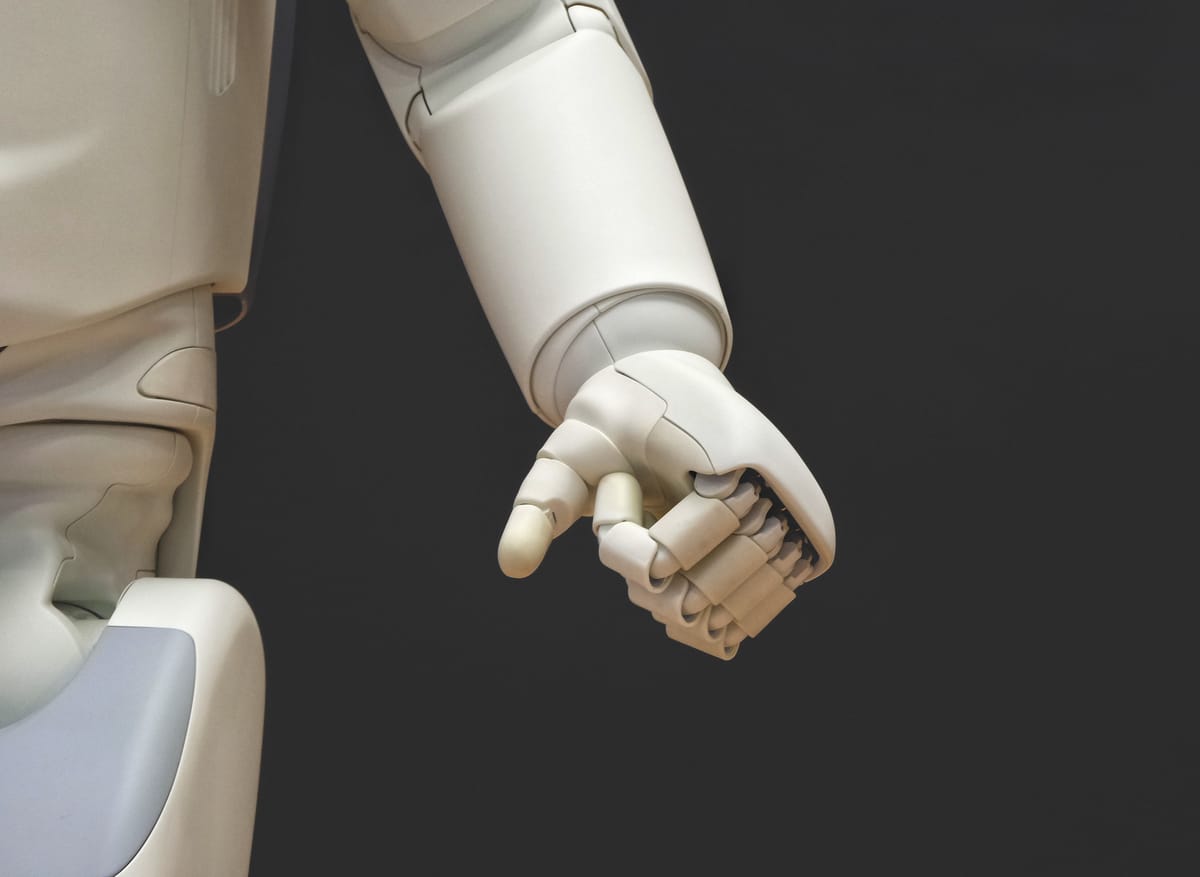

Autonomous Killer Robots: Be Very Afraid

The technology of warfare has always been evolving, but institutions are pushing boundaries that should remain untouched... and Imperial College is one of them.

A wise man once said “Your scientists were so preoccupied with whether or not they could, they didn’t stop to think if they should.”

Given the content of this article, you may be thinking that I should have opened with a quote from the Terminator franchise instead of Jurassic Park, but I chose that quote because it succinctly captures the conclusion of my article.

Lethal Autonomous Weapons (LAWs), or Killer Robots if you wish, are not currently in use, but countries across the globe are developing software and robotics for them.

Technology has been advancing the nature of warfare for centuries; unmanned combat drones are an example of advanced weapons that remove the human from the battlefield. Although a human is still ‘in the loop’, as they call it, such technology remains subject to scrutiny and unease despite some strategic benefits. In fact, I was recently watching a Jubilee discussion on YouTube between US war veterans, and a question came up about the use of drones; all but one of the veterans were clearly hesitant about them. The veteran who was ‘for’ drone warfare passionately argued that the potential to save soldiers from psychological and physical harm outweighed his concerns. So if wars are inevitable in the future, should humans remain on the frontlines when AI could make killing targets more efficient?

Regardless of the supposed benefits, I believe that LAWs have no place in our future. It is unwise to hand the ‘decision to kill’ over to machines that only see humans as data, and one can only hope that such weapons would never be used outside of combat or against civilians. Furthermore, I do not believe that distancing ourselves from war by replacing humans with robots will act as a deterrent to conflict in any way; I think it would do the opposite.

There are many valid reasons why LAWs should not be used. The Campaign to Stop Killer Robots (CSKR) summarises the consequences of implementing LAWs as follows: digital dehumanisation, algorithmic biases, loss of meaningful human control, lack of human judgement and understanding, lack of accountability, inability to explain what happened or why, lowering the threshold of war, and a destabilising arms race.

CSKR is a worldwide coalition of NGOs that seeks an international ban on LAWs before they are fully developed. The campaign reached out to Felix with findings that research projects running at Imperial College London may be used in LAWs systems. Unsurprisingly, there is very little transparency regarding these projects, and a Freedom of Information (FOI) request made by CSKR was rejected. Mariana Canto, a CSKR campaigner, has written an article on Imperial’s links to LAWs; it is available via the link to the right.

I believe many of us came to Imperial because we were attracted by the idea of studying at an institution that pushes the boundaries within STEM. But for the sake of our future, we need to stop right now and ask whether we fully comprehend the implications of such ‘innovation’.

To hold Imperial accountable, please read, share, and sign the open letter below, written by CSKR.

SIGN THE OPEN LETTER

Dear College Leaders,

It is with great concern that we, the undersigned, write to ask Imperial College London to take a stand and ensure that the society we live in is one in which human life is valued – not quantified. We wish to express our deep apprehension regarding Imperial’s role in furthering the development of lethal autonomous weapons (LAWs), also known as “killer robots”.

Imperial is considered a world-class research university, and one of its main missions is to benefit society through excellence in science, engineering, medicine and business. Moreover, its 2020-2025 Strategy states that the institution will focus on “finding appropriate partners and establishing valuable and impactful collaborations” and “underpin this through ethical principles of engagement.” Imperial also identified as one of its main goals for the coming years the importance of “finding new ways to collaborate and to drive positive real-world outcomes.” Given these goals, we expect the institution to take a stand against the development of technologies that diminish the importance of human life by delegating the decision to take a life to a machine.

The absence of the moral reasoning needed to evaluate the proportionality of an attack, in which human life is reduced to merely a factor within a predetermined computation, is worrying. Imperial College London also affirms in its official Strategy that it will “create platforms for research, capacity-building and knowledge exchange to achieve social and economic goals, including tackling the UN Sustainable Development Goals”. It is therefore important to emphasise that one of the 17 UN SDGs is the promotion of “peaceful and inclusive societies for sustainable development”, and that the UN Secretary-General has described the use of autonomous systems to take human life as “morally repugnant”, as a machine is incapable of exercising discretion and lacks the compassion and empathy needed to make morally complex decisions. Lethal autonomous weapons are incompatible with international human rights law, in particular the Right to Life (‘no one shall be arbitrarily deprived of life’), the Right to a Remedy and Reparation, and the principle of human dignity.

It is of utmost importance that Imperial College London consider the risks of this lethal technology, which is being developed with the aid of Imperial’s research centres. It is highly likely that, in the near future, autonomous weapons will expand beyond war zones into public life. They could be used for border control, policing, and upholding oppressive structures and regimes. Therefore, besides the loss of meaningful human control, algorithmic biases and the inability to explain the reasoning behind an automated decision will endanger not only men of colour of ‘military age’ but also civilian people of colour, women and non-binary people. Both kinds of error compound structural biases.

An investigation conducted by the Stop Killer Robots campaign has found that Imperial College London is contributing to the development of lethal autonomous weapons through military-funded research collaborations, close relationships with commercial LAWs developers, and the encouragement of student recruitment to LAWs developers. Findings of particular concern include multiple ongoing projects developing LAWs technologies in partnership with the defence industry and its collaborators. Projects based in Centres for Doctoral Training and other laboratories at Imperial are developing sensor technologies and Unmanned Aerial Vehicles (UAVs) in collaboration with well-known LAWs companies such as BAE Systems and Rolls-Royce.

These activities amount to the active endorsement of lethal autonomous weapons systems by Imperial College London, demonstrating a complete disregard for human rights and dignity. We urge College to take substantial action to prevent the further advancement of lethal autonomous weapons capabilities. We therefore call upon Imperial to:

- Cease all activities directly contributing to the development of lethal autonomous weapons. The College must release a statement acknowledging the harms it has enabled through its research activities into LAWs technologies and committing to no further activities contributing to the development of LAWs. This must be established in formal policy.

- Cease the encouragement of student recruitment to LAWs developers. The University must not allow LAWs developers access to students for the purposes of recruitment, nor must it allow student engagement with LAWs developers through workshops, talks or research projects.

- Take proactive measures to ensure researchers and students are made aware of all possible applications of their research. Imperial must set out concrete plans to improve awareness of the potentially harmful uses of its members’ research.

- Take proactive measures to safeguard against the unethical use of its members’ research output.

- Publicly endorse a ban on lethal autonomous weapons by signing the Future of Life Pledge, found online at the link at the end of this article. More than 30 countries in the United Nations have explicitly endorsed the call for a ban on lethal autonomous weapons systems. Now is a timely moment for UK institutions to show support for this ban and urge the UK government to do the same.

We demand that Imperial College London accept its responsibility for the role it has played in the development of lethal autonomous weapons, and instead use its influence to effect positive change with respect to human rights and dignity.

If you want to learn more or take further action, here are some options:

- Share this open letter across Imperial via email, social media or word of mouth

- Attend a free online CSKR workshop run by CSKR campaigner Mariana Canto on Wednesday 15th December; sign up at the following Eventbrite link: https://www.eventbrite.com/e/hasta-la-vista-humanity-am-i-developing-killer-robots-tickets-217733024337

- Sign the Autonomous Weapons Pledge by the Future of Life Institute linked here: https://futureoflife.org/2016/02/09/open-letter-autonomous-weapons-ai-robotics/

- Visit the CSKR webpage for more information here: https://www.stopkillerrobots.org/