Engineering tomorrow’s humanity

Feroz Salam on the interdependence of man and machine

Every week here at Technology, we try to peek a few weeks into the future, to tell you what might be the best new technology of the coming season or the device you’re going to be saving up for. The far future is often best left to science fiction; technological predictions have a nasty habit of coming back to bite you.

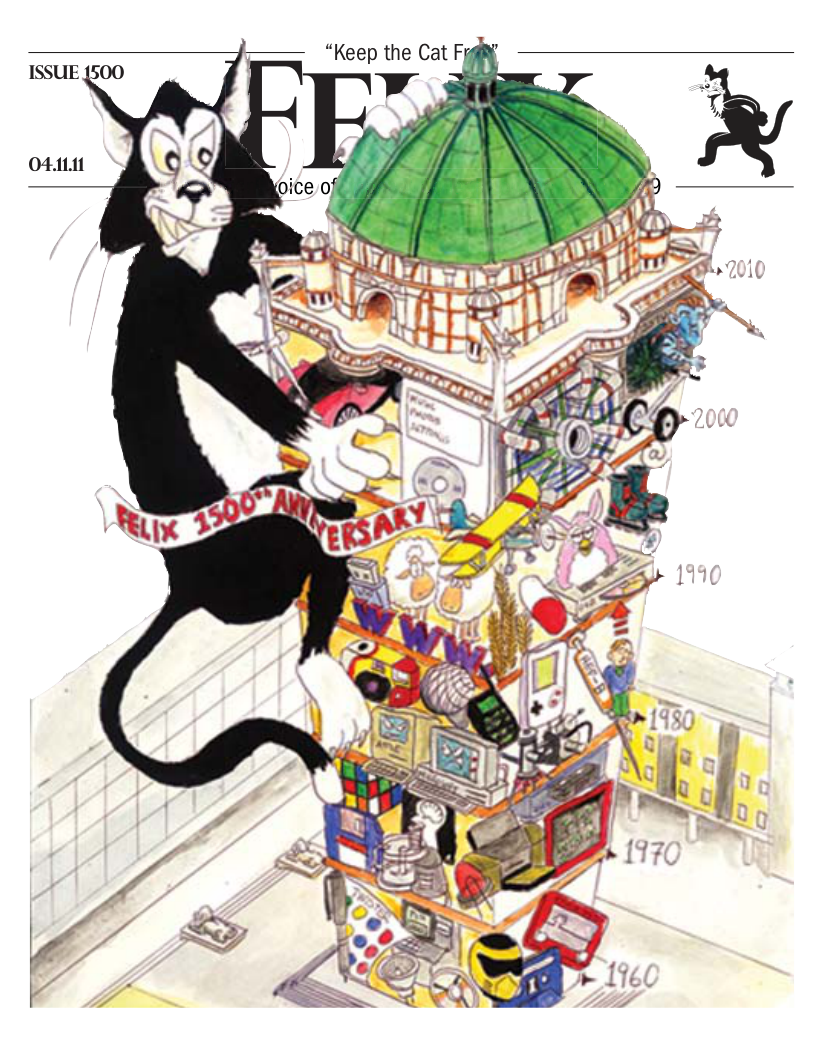

Despite this, looking further ahead offers a tantalising glimpse of the world we would like to see. More than ever, science is pushing the boundaries of what we thought achievable: our relationship with the devices we have built grows ever more symbiotic, and, rudimentary though the technology may be, electronics and biology can now communicate with each other. It’s this combination of factors that is driving a growing discussion of ‘augmented humanity’, a Deus Ex-esque combination of human creative thought and raw mechanical power. Exciting and scary, definitely. Yet how real is this vision, and how far along the road towards it are we? More importantly, what implications does it have for us as a species? And finally, will Felix 3000 be written by cyborgs?

Engineering our bodies

A cornerstone of this symbiosis will be bioengineering: getting computers to work seamlessly with human intelligence. The distinction between man and machine would grow ever more blurry, with our dependence on them and their dependence on us making any differentiation pointless. Considering how heavily electronics already drive our everyday lives, it’s hard not to see this happening.

Despite this, we still see the computers around us as separate objects, subordinates, regardless of how heavily we depend on them to maintain our living standards. Bioengineering might change that dynamic, should its current breakneck rate of progress continue (bioengineering is currently one of the fastest-growing scientific fields, with CNN ranking it number one for job growth over the next ten years). Two key focuses of bioengineering research are human biological enhancement and brain-computer interfacing, topics that until recently were the preserve of science fiction.

Brain-computer interfacing means creating a direct pathway between the brain and an external device, whether through invasive surgery, MRI or EEG. Given the complexity of the work involved, the progress bioengineering labs have made over the last twenty years is truly amazing. Cochlear implants (surgically implanted devices that can give a sense of sound to the profoundly deaf) have a history almost as old as the field, and have shrunk from bulky, painful and risky devices to ‘totally implantable’ ones that interact with the brain without the help of any external interface.

Work on replacement prostheses for people who have lost limbs has also garnered a lot of media attention thanks to the remarkable dexterity these devices offer wearers: people who have lost entire hands are suddenly able to move individual bionic fingers by the power of thought alone. Making this work is a complicated dance of the sciences: materials that must be lightweight yet durable, electronics that must be miniaturised without losing reliability, and complex machine learning algorithms that must run on a processor that’s probably your mobile phone’s poorer cousin.

From the lab to our hands

Moving from the current state of affairs to the imagined singularity of man and machine, however, means taking these devices to an entirely new level of functionality. Most of today’s implants are rough around the edges and largely dependent on bulky external power sources. A truly augmented humanity is still a long way off, so where will we be in 50 years’ time? If current research is any indication, not too far from the vision. Recently, Berkeley researchers showed that patterns recorded by monitoring the brain activity of people watching videos could be used to produce a fairly accurate reconstruction of what they were watching (think dream-recording). Once the technology improves, its potential is massive. Storing your thoughts straight from the source could make the best dictaphone imaginable, and could let us tap into and transfer images from the most realistic cameras we have: our eyes.

From the intangible back to the firmly physical: further research into materials and improvements to existing prosthetics are slowly bringing us commercial ‘exoskeletons’, mechanical frameworks worn over the body that provide enormous amounts of extra power, essentially a real-world Iron Man suit. The first practical trials of these exoskeletons by the US military are expected within 5 to 10 years. Within 50 years, these devices will probably reach the mass market, helping us with everyday lifting, moving and general endurance.

Looking to the past is usually a fairly good indicator of what to expect from the future. Around 50 years ago came the first prototype of what we would come to call the internet and the first demonstration of the computer mouse. This decade has seen the first practical demonstrations of brain-computer interfaces and mightily powerful prosthetics that extend the reach and power of both the human mind and body. Most predictions place the singularity within the 21st century, and this doesn’t seem too idealistic. If you are still in doubt, take a look at the world around you. In a world where we have to debate whether a disabled runner with a prosthetic leg has too much of an advantage over able-bodied sprinters, the boundary between man and machine is far thinner than you might imagine. Felix 3000 won’t see the rise of the machines, but it will probably see us forgetting that we ever feared them.