AI geek bests human rivals

Wordplay, anyone? Or maybe a silicon-based GP?

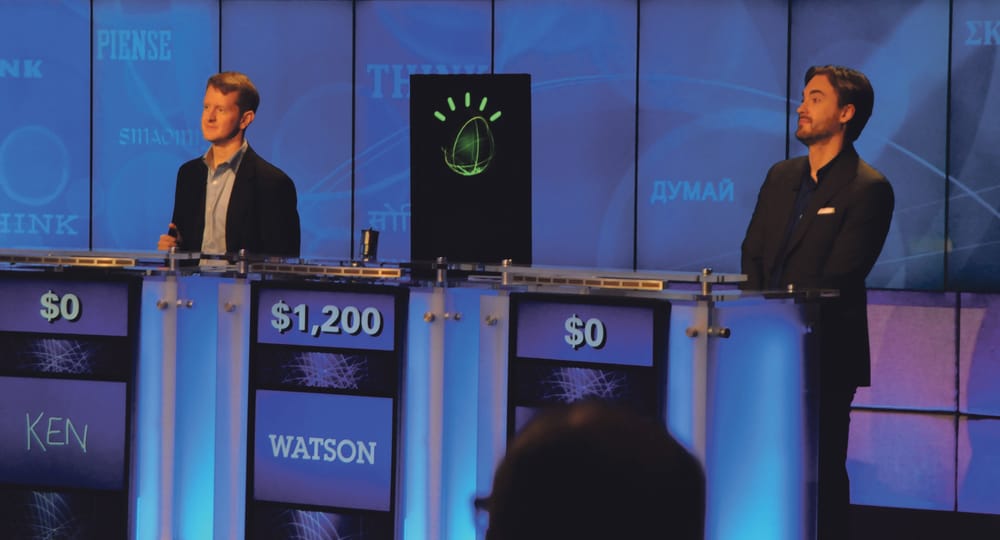

If you have an issue with a silicon-based master race, you might want to start hunting around for a welcoming hippie commune, because things just got serious. In three rounds of the American game show Jeopardy, in which participants must master wordplay to answer general knowledge questions, a pair of skilled human contestants were roundly defeated by a machine, a testament to the distance artificial intelligence has come since chess Grandmaster Garry Kasparov’s epic six-game battle with Deep Blue in the late 90s.

The conclusion of half a decade’s efforts, the victory by IBM’s ‘Watson’ AI program is a massive achievement, one that was considered virtually impossible in 2005. The complexity of Watson’s system lies not in the knowledge of trivia: Watson has been endowed with a 4-terabyte data repository (that’s roughly 512 Wikipedias, for reference).

The real challenge is the complex wordplay that the game show is based on, which often requires contestants to draw together pop culture references, puns and even slang merely to understand the question. For example, ‘This term for a long-handled garden implement could also be used to describe an immoral pleasure seeker’. (The answer, if you are wondering, is not ‘What is a hoe?’, but ‘What is a rake?’. Look it up on Wikipedia.)

Watson proved to be more than able at this task, breezing through the three rounds and amassing a grand total of £47,923 (£30,000 more than his nearest human challenger). At each stage, when the question was revealed to the contestants, a text file containing the question was sent to Watson. On opening the file, Watson would run a series of algorithms in parallel to try to discover the answer, with his confidence in his response dependent on the number of algorithms that came to the same conclusion.
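For the curious, the idea of running several algorithms side by side and trusting the answer most of them agree on can be sketched in a few lines of Python. This is only a toy illustration of that description, not IBM’s code: the three ‘algorithms’, the function names and the buzz-in threshold are all invented for the example.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor

# Hypothetical stand-ins for Watson's algorithms (invented for this sketch).
# Each one takes the clue text and returns a single candidate answer.
def keyword_lookup(clue):
    return "rake" if "garden implement" in clue else "unknown"

def pun_matcher(clue):
    return "rake" if "immoral pleasure seeker" in clue else "unknown"

def naive_guess(clue):
    return "hoe" if "garden" in clue else "unknown"

ALGORITHMS = [keyword_lookup, pun_matcher, naive_guess]

def answer_with_confidence(clue, algorithms=ALGORITHMS):
    """Run every algorithm in parallel and return (answer, confidence).

    Confidence is the share of algorithms that reached the winning
    answer, a simple assumption standing in for whatever scoring the
    real system uses.
    """
    with ThreadPoolExecutor() as pool:
        candidates = list(pool.map(lambda algo: algo(clue), algorithms))
    best, votes = Counter(candidates).most_common(1)[0]
    return best, votes / len(candidates)

def should_buzz(confidence, threshold=0.5):
    # Assumed rule: only buzz in when more than half the algorithms agree.
    return confidence > threshold

clue = ("This term for a long-handled garden implement could also be "
        "used to describe an immoral pleasure seeker")
answer, confidence = answer_with_confidence(clue)
print(answer, round(confidence, 2), should_buzz(confidence))
```

Here two of the three toy algorithms settle on ‘rake’ and one on ‘hoe’, so the sketch answers ‘rake’ with two-thirds confidence and decides to buzz in.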

How Watson compared to his human rivals is a matter of some debate. Watson’s circuitry meant that he could often buzz in dramatically faster than his two distinctly fleshier opponents, and his vast data bank was optimised solely for the purpose of playing Jeopardy. Yet in his defence, he had none of the decades-long training in natural language that Jeopardy champions and opponents Ken Jennings and Brad Rutter enjoy simply by being human. In that respect, it’s probably fair to argue that Jennings and Rutter were playing a completely different game to Watson: the former were testing their trivia skills while the latter was testing his English language skills.

So what does IBM have planned for the young linguistic master now that his Jeopardy days are over? Watson more than served his original purpose, convincing the US public that IBM is still at the forefront of solving some of the world’s toughest computing problems. Yet running Watson as he is in any other situation would probably be highly impractical. For the moment, IBM has announced that it will be exploring the use of similar software to aid medical diagnoses and legal research. The truly exciting possibilities of the software lie well in the future though: after all, many personal computers today can play more efficient chess games than the specialist chess computers of the 90s.

For a six-year-old idiot savant whose only skill is an American game show, Watson has done well: his dominant performance has brought AI and robotics back to the forefront of our imaginations. But his legacy will lie in how far that performance goes towards bringing computers that understand the intricacies of speech into our homes. We’re used to chess-playing computers, but if things go according to IBM’s plan it may be time to prepare for your GP being a copper and silicon box somewhere in Iceland.