Law and Order: GPT

Nefarious AI use and what we’re not doing about it.

It’s easy to limit our understanding of AI to headlines and scandals, both good and bad. Gaining a deeper knowledge of what it means to be human feels existential and daunting in a world where so many things seem to be going wrong. It’s so much simpler to focus on the immediate, obvious chaos around us instead of the looming, indistinct threats arising.

It’s certainly what the UK government is happy to do: espousing the benefits of an “international” response at the Bletchley conference (the first major meeting of international leaders on AI regulation), whilst following it up with little to no actual policy, and firefighting with warnings and Ofcom investigations when AI slop hits the fan.

With the demeanour of an arthritic, hibernating bear, Starmer’s government will only react when poked. When Grok, the integrated AI of X, began generating sexually explicit images of real people – including children – the government was quick to react, condemning X and outlining how current and future legislation outlaws such offences. This did lead to the restriction of Grok’s image generation capabilities and has likely set a precedent for future cases of AI-based image abuse.

However, this does not go far or deep enough. When the government limits itself to reaction-based policy, acting almost entirely according to public and media attention, huge issues fall through the gaps.

Reality perception online

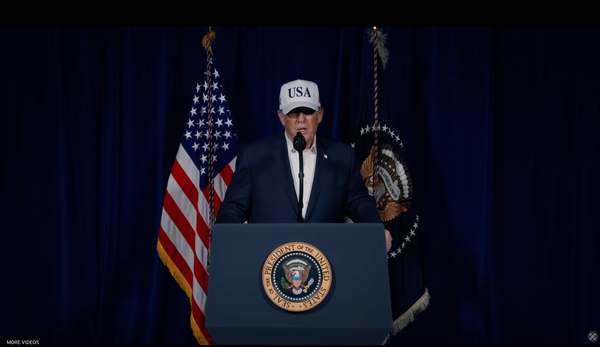

In early January this year, the official White House X account posted an image of an anti-ICE protester, Nekima Levy Armstrong, being arrested. It was later uncovered that this image had been altered to show her in much more distress than in the original. This practice is not illegal, and the account never explicitly claimed that the image was accurate; however, that does not make it any less damaging. This subtle bending of our reality online is insidious: a new kind of propaganda that doesn’t loudly declare its intentions but rather makes a few minor changes aimed at reinforcing existing biases, whilst remaining under the radar for a casual viewer.

This is not the first, nor will it be the last, instance of AI being weaponised for a political aim, all without breaking a single law.

Cart rules for motor cars

The reliance of the UK government on existing or slightly modified legislation to monitor AI-based crime is akin to regulating cars on the road based on laws for wagons and horses: attempting to fit old legislation to a new technology that functions in a completely new manner, with new capabilities and a power that far outpaces its predecessors.

The ability of Grok, and other models like it, to modify our collective understanding of the online world is not a niche topic: it’s hard to spend more than 30 minutes on any social media app without encountering an account outlining the perils of AI, or a generated video just close enough to reality that you almost believe it. Yet this growing understanding seems to have stopped short of the halls of power. Starmer and his government appear unable to grasp the true depth and breadth of the risks we face, whether because of a political system stuck somewhere in the 2008 crash, grasping for growth beyond reason and unwilling to address AI as more than a passing fad, or because of a genuine apathy towards the development of technology and our relationship to it beyond the next election.