Policy in a Strange Land

UK Government policy and our reality online in an age of AI.

It’s no secret that AI is one of the fastest growing technologies of our age, in terms of both its capabilities and its integration into mainstream culture. This rapid and widespread expansion calls for comprehensive legislation paired with responsive, competent leadership. Recent events on X serve as an excellent case study in the intersection of AI and policy: what has worked well so far, and what is left to learn.

Grok

Grok is the most well-known product of xAI, first released in 2023 and fully integrated into X (formerly Twitter). Grok takes its name from the 1961 novel “Stranger in a Strange Land” by Robert A. Heinlein, in which the word signifies deep, empathetic understanding.

This is perhaps a nod to Grok’s broad general knowledge, its human-like qualities, and its seamless integration into the culture of the app: by drawing on live content from X as training data, it builds a comprehensive baseline understanding of what is posted there.

How it works

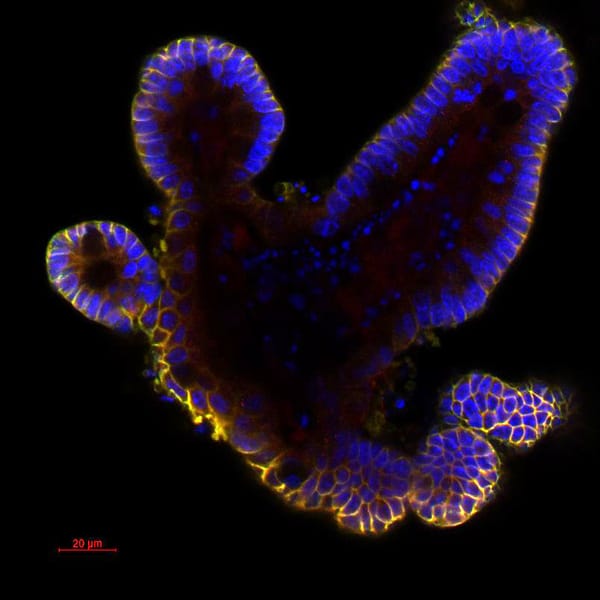

One of Grok’s most infamous features is its image generation capability, currently supported by Aurora, an autoregressive mixture-of-experts model.

In simple terms, “autoregressive” means the model predicts the next element, or “token”, in a sequence based on the part of the sequence it has already generated and on the patterns it learned from its training data. To do this efficiently, it uses a Mixture of Experts architecture: a set of specialized “expert” networks, of which only a few are active at once (chosen by a gating network that selects the right experts for each input), as opposed to a dense network, which runs the whole network for every input.

This efficiency reduces the computing power needed for each input, which makes large models like Grok cheaper to run and more accessible.
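To make the routing idea concrete, here is a minimal sketch of a Mixture of Experts step in plain Python. It is a toy illustration only, not Aurora’s actual architecture: the four “experts”, the gate weights, and the function name `moe_forward` are all hypothetical, chosen so the example stays small enough to follow by hand.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def moe_forward(x, gate_weights, experts, top_k=2):
    """Run input x through only the top_k experts chosen by the gate."""
    # The gating network scores every expert for this input.
    gate_scores = [dot(w, x) for w in gate_weights]
    # Keep only the top_k highest-scoring experts; the rest are never run.
    chosen = sorted(range(len(experts)),
                    key=lambda i: gate_scores[i], reverse=True)[:top_k]
    # Normalise the chosen experts' scores into mixing weights.
    weights = softmax([gate_scores[i] for i in chosen])
    outputs = [experts[i](x) for i in chosen]
    # Blend the chosen experts' outputs, element by element.
    combined = [sum(w * out[d] for w, out in zip(weights, outputs))
                for d in range(len(outputs[0]))]
    return combined, chosen


# Hypothetical toy experts: each is just a simple function of the input.
experts = [
    lambda x: [2.0 * v for v in x],
    lambda x: [v + 1.0 for v in x],
    lambda x: [-v for v in x],
    lambda x: [v * v for v in x],
]
# Hypothetical gate weights, one row per expert.
gate_weights = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]

x = [3.0, 1.0]
output, used = moe_forward(x, gate_weights, experts, top_k=2)
print("experts used:", used)
print("output:", output)
```

With this input, only two of the four experts are ever called. A dense network would be the equivalent of running all four every time, which is where the saving in computation comes from.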

Crime and consequences

A few months after its introduction, in late 2025, Grok’s image generation capability caused uproar when X users began to generate explicit images of real people, including children, and post them online.

The Center for Countering Digital Hate, a British-American non-profit, estimates that over three million photorealistic images of a sexual nature were created in an 11-day period.

Amid public outcry and media attention, the Government responded. In a speech in the House of Commons on the 9th of January, Technology Secretary Liz Kendall stated that the generation and distribution of these images is illegal under the Online Safety Act 2023, and that a further, specific ban on “nudification apps” would be included in the Crime and Policing Bill (which, as of the 27th of January, is under review in the House of Commons).

This response resulted in X restricting use of the function to paid subscribers on the 9th of January, before removing the ability altogether on the 15th. An ongoing Ofcom investigation into X’s role and responsibilities in the issue was also independently initiated.

Future outlook

This response represents the first steps in what is likely to be a long and arduous journey tackling the existing issues exacerbated by AI, and the completely new problems that we may face.

As AI image generation begins to encroach on our lived reality online, striking a balance between informed, nuanced policy and personal responsibility, alongside education about the content we consume online, will be vital.